Sitemaps and robots files are among the most common SEO tools, and they are surprisingly easy to do poorly, particularly when using a CMS.

As a developer, it is important to consider how SEO should influence your coding, because coming back to try and rework your website to better suit SEO requirements after it's been released can be a real headache.

Content Management Systems such as Umbraco are particularly prone to these kinds of issues, because pages can be added and deleted frequently. A lazy approach, such as crawling your site once and then adding a sitemap.xml file to your server, doesn’t account for all the changes that occur afterwards (unless you are a very diligent web developer who keeps the file up to date).

Why is this a problem?

Static files can get you by if you have a very simple website, but they aren’t ideal, because they become outdated as content changes in the CMS. When you add another site to your Umbraco installation, this goes from a mild inconvenience, one you can brush off with “eh, I’ll deal with that problem later”, to a significant problem: every site in that installation serves the same XML sitemap and the same robots.txt.

Even if you do a little better and create a node on your site to hold the sitemap, once you add a new site to Umbraco it is very easy to write code that doesn’t work out which site is being requested and instead just grabs the first root node. Anywhere that happens, you can end up reading from the wrong site and adding the wrong link or displaying the wrong content. If you are hardcoding node ids anywhere in your site, you are susceptible to this kind of unexpected behaviour.

So how can we provide a way for the site admin to change the site and have it reflected in the sitemap?

Dynamically Create your Files on Request

Umbraco makes a point of being hands-off: if you want to do something, it doesn’t get in your way. You can register your own routes without having to circumvent or hack the CMS, and Umbraco will happily work alongside your own MVC routes!

Step 1: Create your Controller

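    using System.Web.Mvc;

    public class SeoController : Controller
    {
        public SeoController()
        {
        }

        // Placeholder response; replaced with the real sitemap in Step 5
        public ActionResult Sitemap()
        {
            return Content("Hello Sitemap");
        }

        // Placeholder response; replaced with the real robots text in Step 6
        public ActionResult Robots()
        {
            return Content("Hello Robots");
        }
    }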

Step 2: Add Custom Global.asax.cs

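    using System;
    using Umbraco.Web;

    // The namespace and class name must match the Inherits attribute in Global.asax
    namespace MyApp.Site
    {
        public class Global : UmbracoApplication
        {
            protected override void OnApplicationStarted(object sender, EventArgs e)
            {
                base.OnApplicationStarted(sender, e);
            }
        }
    }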

Note: At this stage it is important to open the Global.asax file, change the Inherits attribute to point at your new class, and add a CodeBehind reference:

    <%@ Application CodeBehind="Global.asax.cs" Inherits="MyApp.Site.Global" Language="C#" %>

Step 3: Add Custom Routes

    // Needs: using System.Web.Mvc; using System.Web.Routing;
    protected override void OnApplicationStarted(object sender, EventArgs e)
    {
        RouteTable.Routes.MapRoute(
            "sitemap",      // name of the route
            "sitemap.xml",  // path
            new { controller = "Seo", action = "Sitemap" });

        RouteTable.Routes.MapRoute(
            "robots",
            "robots.txt",
            new { controller = "Seo", action = "Robots" });

        base.OnApplicationStarted(sender, e);
    }

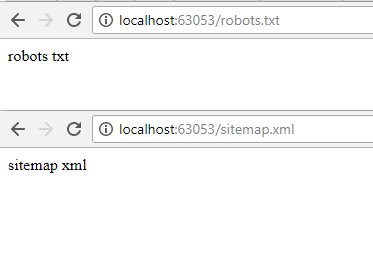

To test the new endpoints, request /sitemap.xml and /robots.txt. Each should return the placeholder text from the controller, which confirms that the routes are configured correctly.

Problem: We have no UmbracoContext

This means the controller knows nothing about the content tree, so on its own it doesn’t get us anywhere!

Step 4: Create your own Umbraco Context and Grab the Root Node

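    using System.Linq;
    using System.Web.Mvc;
    using Umbraco.Core;
    using Umbraco.Core.Configuration;
    using Umbraco.Web;
    using Umbraco.Web.Routing;
    using Umbraco.Web.Security;

    public class SeoController : Controller
    {
        // Holds the context returned by EnsureContext() for the current request
        private UmbracoContext _context;

        public ActionResult Sitemap()
        {
            // we use the HttpContext that comes with the controller
            _context = UmbracoContext.EnsureContext(
                HttpContext,
                ApplicationContext.Current,
                new WebSecurity(HttpContext, ApplicationContext.Current),
                UmbracoConfig.For.UmbracoSettings(),
                UrlProviderResolver.Current.Providers,
                false);

            // scheme and host of the current request, e.g. http://example.com
            var rootUrl = Request.Url.GetLeftPart(UriPartial.Authority);

            // pick the root node whose absolute URL belongs to the requested site
            var rootNode = new UmbracoHelper(_context)
                .TypedContentAtRoot()
                .FirstOrDefault(x => x.UrlAbsolute().Contains(rootUrl));

            return Content("Hello Sitemap", "text/xml");
        }
    }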

Note: It is best practice here to use the context returned by the EnsureContext() call, rather than referencing the UmbracoContext.Current singleton.
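One thing to watch with the `Contains(rootUrl)` check: it is a substring match, so `http://example.com` would also match a node living at `http://example.com.au`. Below is a minimal sketch of a stricter comparison, assuming you are happy to match on scheme, host and port. The `SiteMatcher` class and `IsSameAuthority` method are hypothetical names for illustration, not part of Umbraco.

```csharp
using System;

public static class SiteMatcher
{
    // Hypothetical helper: true when nodeUrl has the same scheme, host and port
    // as the URL of the current request.
    public static bool IsSameAuthority(string nodeUrl, Uri requestUrl)
    {
        Uri nodeUri;
        if (!Uri.TryCreate(nodeUrl, UriKind.Absolute, out nodeUri))
        {
            return false; // not an absolute URL, so it cannot identify a site
        }

        // Compare only scheme + host + port, ignoring path and query
        return Uri.Compare(
            nodeUri,
            requestUrl,
            UriComponents.SchemeAndServer,
            UriFormat.Unescaped,
            StringComparison.OrdinalIgnoreCase) == 0;
    }
}
```

In the controller this could replace the substring check, for example `.FirstOrDefault(x => SiteMatcher.IsSameAuthority(x.UrlAbsolute(), Request.Url))`.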

Step 5: Generate Xml

    // Needs: using System.Xml.Linq;
    // Assumes a private UmbracoContext _context field on the controller
    public ActionResult Sitemap()
    {
        // we use the HttpContext that comes with the controller
        _context = UmbracoContext.EnsureContext(
            HttpContext,
            ApplicationContext.Current,
            new WebSecurity(HttpContext, ApplicationContext.Current),
            UmbracoConfig.For.UmbracoSettings(),
            UrlProviderResolver.Current.Providers,
            false);

        var rootUrl = Request.Url.GetLeftPart(UriPartial.Authority);
        var rootNode = new UmbracoHelper(_context)
            .TypedContentAtRoot()
            .FirstOrDefault(x => x.UrlAbsolute().Contains(rootUrl));

        // no site matches the requested host, so there is nothing to list
        if (rootNode == null)
        {
            return HttpNotFound();
        }

        var ns = XNamespace.Get("http://www.sitemaps.org/schemas/sitemap/0.9");
        var rootElement = new XElement(ns + "urlset");

        // only include pages that have a template, i.e. pages that actually render
        foreach (var item in rootNode.DescendantsOrSelf().Where(x => x.TemplateId != 0))
        {
            var element = new XElement(ns + "url");
            element.Add(new XElement(ns + "loc", item.UrlAbsolute()));
            element.Add(new XElement(ns + "lastmod", item.UpdateDate.ToUniversalTime()));
            rootElement.Add(element);
        }

        var xml = new XDocument(new XDeclaration("1.0", "UTF-8", null), rootElement);

        // XDocument.ToString() omits the XML declaration, so prepend it explicitly
        return Content(xml.Declaration + xml.ToString(), "text/xml");
    }

Now, whenever content is published on any of the sites, the sitemap reflects the change the next time it is requested!
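If you want to check the shape of the generated XML without running Umbraco, the XML-building part can be pulled into a small function. The following is only a sketch under stated assumptions: `SitemapSketch` and `Build` are hypothetical names, and the URL/date pairs stand in for the `UrlAbsolute()` and `UpdateDate` values of real content nodes. It also formats `lastmod` explicitly as a UTC W3C datetime.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Xml.Linq;

public static class SitemapSketch
{
    private static readonly XNamespace Ns = "http://www.sitemaps.org/schemas/sitemap/0.9";

    // Builds sitemap XML from (url, last modified) pairs.
    // The pairs are stand-ins for Umbraco nodes (an assumption for illustration).
    public static string Build(IEnumerable<KeyValuePair<string, DateTime>> pages)
    {
        var urlset = new XElement(Ns + "urlset");

        foreach (var page in pages)
        {
            // UTC timestamp in W3C datetime form, independent of server culture
            var lastModified = page.Value.ToUniversalTime()
                .ToString("yyyy-MM-dd'T'HH:mm:ss'Z'", CultureInfo.InvariantCulture);

            urlset.Add(new XElement(Ns + "url",
                new XElement(Ns + "loc", page.Key),
                new XElement(Ns + "lastmod", lastModified)));
        }

        var document = new XDocument(new XDeclaration("1.0", "UTF-8", null), urlset);

        // XDocument.ToString() leaves out the declaration, so add it by hand
        return document.Declaration + Environment.NewLine + document;
    }
}
```

Keeping this step free of Umbraco types makes it easy to unit test; the controller action would then only be responsible for finding the root node and collecting the pairs.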

Step 6: Robots

Robots is much easier. Add a textarea property to the home node (the code below uses the alias "robots") so that editors can manage the robots rules for that site specifically, then just read the text and write it to your response.

    // Assumes a private UmbracoContext _context field on the controller
    public ActionResult Robots()
    {
        // we use the HttpContext that comes with the controller
        _context = UmbracoContext.EnsureContext(
            HttpContext,
            ApplicationContext.Current,
            new WebSecurity(HttpContext, ApplicationContext.Current),
            UmbracoConfig.For.UmbracoSettings(),
            UrlProviderResolver.Current.Providers,
            false);

        var rootUrl = Request.Url.GetLeftPart(UriPartial.Authority);
        var rootNode = new UmbracoHelper(_context)
            .TypedContentAtRoot()
            .FirstOrDefault(x => x.UrlAbsolute().Contains(rootUrl));

        // no site matches the requested host
        if (rootNode == null)
        {
            return HttpNotFound();
        }

        // get robots field from home node; Content() needs a string, so read it as one
        var robots = rootNode.GetPropertyValue<string>("robots");

        return Content(robots ?? string.Empty, "text/plain");
    }
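If an editor leaves the robots field empty, the action above returns an empty file. One option, sketched here, is to fall back to a default. The `RobotsSketch` class, its `Build` method and the choice of an allow-everything default that points crawlers at /sitemap.xml are all assumptions for illustration; pick whatever default suits your sites.

```csharp
using System;

public static class RobotsSketch
{
    // Returns the editor's text when present, otherwise a permissive default
    // that advertises the dynamically generated sitemap for this site.
    public static string Build(string editorText, string rootUrl)
    {
        if (!string.IsNullOrWhiteSpace(editorText))
        {
            return editorText.Trim();
        }

        return string.Join("\n", new[]
        {
            "User-agent: *",
            "Disallow:",
            "Sitemap: " + rootUrl.TrimEnd('/') + "/sitemap.xml"
        });
    }
}
```

In the action this would be used as `return Content(RobotsSketch.Build(robots, rootUrl), "text/plain");`.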

This is just one example of how you could achieve this outcome, but this method is clean and works no matter how many sites you add to Umbraco.

***

Mudbath is a 40+ person digital product agency based in Newcastle, NSW. We research, design and develop products for industry leaders - including websites built with Umbraco CMS!